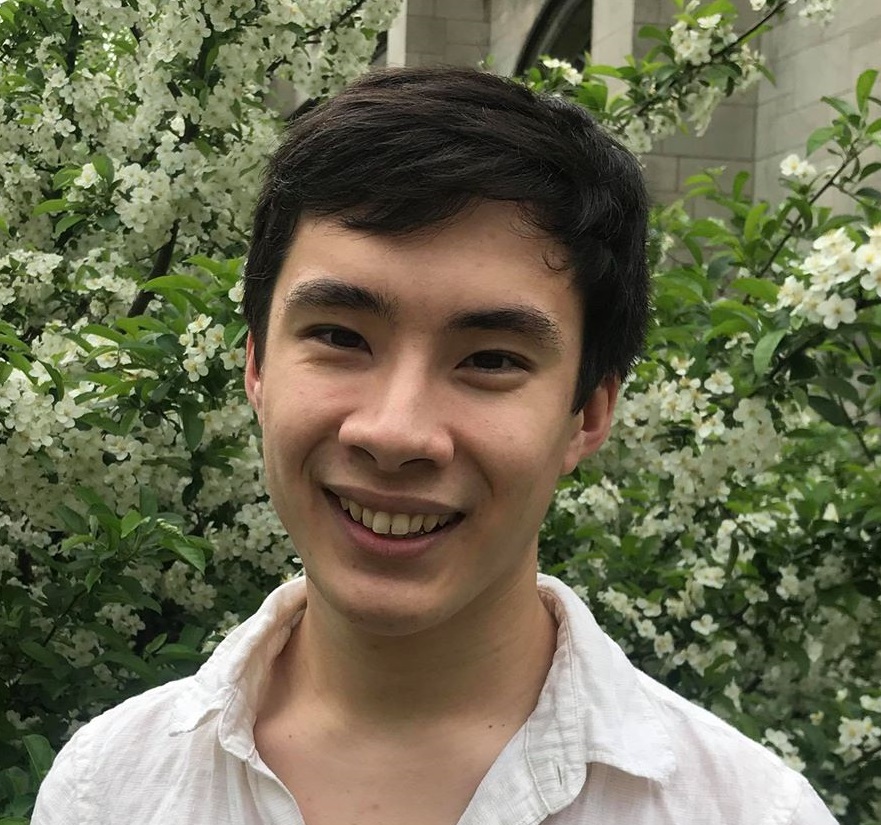

Michael Hanna


I’m Michael Hanna, a PhD student at the ILLC at the University of Amsterdam, supervised by Sandro Pezzelle and Yonatan Belinkov, through ELLIS. I’m interested in interpreting and evaluating NLP models by combining techniques from diverse fields such as cognitive science and mechanistic interpretability.

News

- January 2026: A new paper with Emmanuel Ameisen, studying the latent planning abilities of LLMs using transcoder circuits, has been accepted to ICLR 2026!

- October 2025: I am excited to have received a Google PhD Fellowship in NLP! Interested in applying? Feel free to peek at my application materials here.

- August 2025: New paper on understanding the formal-functional divide using component circuits will appear in Computational Linguistics!

- May 2025:

  - My Anthropic Fellows partner Mateusz Piotrowski and I have released circuit-tracer, a library for transcoder circuit finding in open-source LLMs! Try it out on Neuronpedia - it only takes a few seconds. Thanks a bunch to Emmanuel Ameisen and Jack Lindsey for supervising, as well as Johnny Lin for making the Neuronpedia integration go so smoothly.

  - Many colleagues and I have a paper accepted to ICML 2025 on a new Mechanistic Interpretability Benchmark, for both causal graph and circuit identification!

  - Another big group paper on LLM judges has been accepted to ACL 2025!

- March 2025: I’m in Berkeley until September as an Anthropic Research Fellow!

- January 2025: New paper, coauthored with Aaron Mueller, accepted to NAACL 2025! It uses sparse autoencoders to understand how language models incrementally process sentences.

- December 2024: My colleagues and I have released short videos explaining basic concepts in interpretability! My advisor, Sandro Pezzelle, and I talk about circuits; check out the video here!

- September 2024: New paper, led by Curt Tigges, accepted to NeurIPS 2024; it also appeared as an oral at RepL4NLP 2024 at ACL!

- July 2024: New paper on faithfulness in circuit-finding will appear at COLM and as a spotlight at the ICML 2024 Mech. Interp. Workshop (poster)!

- May 2024: New paper on underspecification + LMs accepted to the Findings of ACL 2024; congrats to Frank Wildenburg, the master’s student who led this project!

- April 2024: I have received a Superalignment Grant from OpenAI! I’m very excited to work on improving our mechanistic understanding of how larger models’ capabilities differ from those of smaller models.

- March 2024: Along with Jaap Jumelet, Hosein Mohebbi, Afra Alishahi, and Jelle Zuidema, I gave a tutorial on Transformer-specific Interpretability at EACL 2024, on March 24th! My session focused on circuits in pre-trained transformer language models; find the recording here.

- November 2023: I’ve been awarded a prize from CIMeC and the Fondazione Alvise Comel for writing one of the top two master’s theses that connect AI and cognitive neuroscience!

- October 2023: New paper on transformer LMs’ animacy processing accepted to EMNLP 2023!

- September 2023: New paper on GPT-2’s partially generalizable circuit for greater-than has been accepted to NeurIPS 2023!

- January 2023:

- September 2022: I graduated from the University of Trento (Cognitive Science) and Charles University in Prague (Computer Science), as part of the EM LCT dual-degree master’s program! My thesis, supervised by Roberto Zamparelli and David Mareček, is available here.

- August 2022: New paper at COLING 2022!

- November 2021: New papers at WMT 2021 and BlackboxNLP 2021!

- June 2020: I graduated from the University of Chicago (CS and Linguistics)! I wrote an honors thesis on interpreting variational autoencoders for sentences, advised by Allyson Ettinger.

Selected Publications

For a full list, see Publications or my Google Scholar.

Michael Hanna, Yonatan Belinkov, and Sandro Pezzelle. 2025. Are Formal and Functional Linguistic Mechanisms Dissociated in Language Models? To appear in Computational Linguistics. (Computational Linguistics)

Michael Hanna* and Aaron Mueller*. 2025. Incremental Sentence Processing Mechanisms in Autoregressive Transformer Language Models. In the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics. (NAACL 2025)

Curt Tigges, Michael Hanna, Qinan Yu, and Stella Biderman. 2024. LLM Circuit Analyses Are Consistent Across Training and Scale. In the Thirty-eighth Conference on Neural Information Processing Systems. (NeurIPS 2024)

Michael Hanna, Sandro Pezzelle, and Yonatan Belinkov. 2024. Have Faith in Faithfulness: Going Beyond Circuit Overlap When Finding Model Mechanisms. In the First Conference on Language Modeling (COLM). (COLM 2024)

Michael Hanna, Yonatan Belinkov, and Sandro Pezzelle. 2023. When Language Models Fall in Love: Animacy Processing in Transformer Language Models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP). (EMNLP 2023)

Michael Hanna, Ollie Liu, and Alexandre Variengien. 2023. How does GPT-2 compute greater-than?: Interpreting mathematical abilities in a pre-trained language model. In the Thirty-seventh Conference on Neural Information Processing Systems. (NeurIPS 2023)

Michael Hanna, Roberto Zamparelli, and David Mareček. 2023. The Functional Relevance of Probed Information: A Case Study. In Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics, pages 835–848, Dubrovnik, Croatia. Association for Computational Linguistics. (EACL 2023)

Michael Hanna and David Mareček. 2021. Investigating BERT’s Knowledge of Hypernymy through Prompting. In Proceedings of the Fourth BlackboxNLP Workshop on Analyzing and Interpreting Neural Networks for NLP. Punta Cana, Dominican Republic. Association for Computational Linguistics. (BlackboxNLP 2021)

Academic Interests

My main research interest, interpretability in the context of modern language models, is twofold. First, I’m interested in asking “What are the abilities of these models?” from a perspective informed by linguistics and cognitive science. In this so-called behavioral paradigm, I study these models on a pure input-output level, leveraging the wide body of psycholinguistic research conducted on humans. Second, I’m interested in answering “How do these models achieve such impressive performance on linguistic tasks?”, using techniques from (mechanistic) interpretability. I use causal interventions to perform low-level studies of language models, uncovering the mechanisms that drive their behavior.

Personal Interests

- Living Abroad: I’ve been able to live in a variety of countries through different programs, including Spain (SYA); Korea (NSLI-Y, FLAG); Czechia and Italy (LCT); and the Netherlands and (soon) Israel (ELLIS). If you’re in high school or university and want to know more about opportunities like this, shoot me an email; I’m happy to share more info about programs that will let you live abroad, oftentimes for free!

- Urbanism: I’m fascinated by the way that our built environment affects our lives. I’m a big fan of walkable neighborhoods, public transportation, and the power of well-designed spaces to bring people together.

- Vocal Performance: During my undergrad, I was a baritone in Run for Cover, an a cappella group at UChicago. Check us out on Spotify or iTunes!